Testing antivirus software involves a comprehensive process designed to evaluate its effectiveness in protecting systems from various types of malware and security threats. Here’s an in-depth look at the procedures typically involved:

- Sample Selection: Testers begin by collecting a diverse range of malware samples, including viruses, worms, Trojans, ransomware, adware, and potentially unwanted programs (PUPs). These samples are obtained from various sources, such as malware repositories, captured network traffic, and real-world malware incidents. The goal is to create a representative sample set that reflects the current threat landscape.

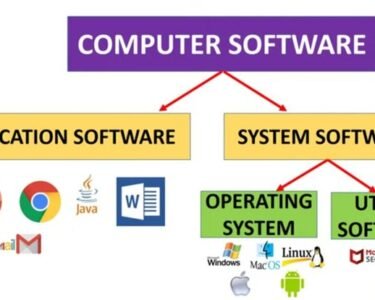
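Keeping the sample set representative usually means not counting the same binary twice when it arrives from several sources. One way a tester might do this is to deduplicate samples by cryptographic hash and tally the unique samples per category. This is a minimal sketch, assuming samples are supplied as in-memory byte strings tagged with a category and source label. The `Sample` type and `build_manifest` function are hypothetical, and a real pipeline would read files from an isolated, access-controlled repository instead.

```python
import hashlib
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """Hypothetical record for one collected sample."""
    category: str   # e.g. "ransomware", "worm", "pup"
    source: str     # e.g. "repository", "network-capture", "incident"
    data: bytes     # raw file contents


def build_manifest(samples):
    """Deduplicate samples by SHA-256 and count unique samples per category.

    Returns (manifest, counts): manifest maps each SHA-256 hex digest to the
    first Sample seen with that content; counts tallies unique samples by category.
    """
    manifest = {}
    for sample in samples:
        digest = hashlib.sha256(sample.data).hexdigest()
        # Keep only the first copy of identical content, so the same binary
        # collected from two sources is not counted twice.
        manifest.setdefault(digest, sample)
    counts = Counter(s.category for s in manifest.values())
    return manifest, counts


if __name__ == "__main__":
    # Harmless placeholder bytes stand in for real malware samples.
    collected = [
        Sample("ransomware", "repository", b"placeholder-A"),
        Sample("ransomware", "incident", b"placeholder-A"),  # duplicate content
        Sample("worm", "network-capture", b"placeholder-B"),
        Sample("pup", "repository", b"placeholder-C"),
    ]
    manifest, counts = build_manifest(collected)
    print(len(manifest), dict(counts))
```

Hashing the content rather than comparing file names catches identical samples that were renamed or collected through different channels. Near-duplicate variants would still be counted separately, which may or may not be desirable depending on the test design.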

- Test Environment Setup: A controlled test environment is established to simulate real-world computing scenarios. This environment may include virtual machines running different operating systems (e.g., Windows, macOS, Linux) and configurations. Test systems are equipped with the antivirus software being evaluated, as well as common applications and utilities found in typical computing environments.

- Baseline Configuration: Before running any tests, the antivirus software is configured with default settings to reflect the typical behavior of an out-of-the-box installation. This ensures consistency across tests and allows for a fair evaluation of each product’s capabilities.

- Test Execution: Malware samples are systematically introduced to the test environment to assess the antivirus software’s detection and remediation capabilities. Tests may include:

  - Static Detection: Each malware sample is scanned, without being executed, to determine whether the antivirus software’s signature-based engine recognizes it as a known threat from predefined signatures or patterns.

  - Dynamic Analysis: Malware samples are executed in a controlled environment to observe their behavior and assess whether the antivirus software detects and blocks malicious activity in real time.

  - False Positive Testing: Legitimate software and files are also scanned to evaluate the antivirus software’s tendency to incorrectly flag them as malicious (false positives), which can disrupt normal operations and productivity.

  - Performance Impact: Tests measure the impact of the antivirus software on system performance, including CPU and memory usage, disk I/O, and application responsiveness, to assess its resource consumption and overhead.

- Evaluation Metrics: Test results are analyzed based on various metrics, including:

  - Detection Rate: The percentage of malware samples correctly identified by the antivirus software.

  - False Positive Rate: The percentage of legitimate files and applications that the antivirus software incorrectly flags as malicious.

  - Performance Impact: The degree to which the antivirus software affects system performance and responsiveness during normal operation and malware scanning.

  - Remediation Effectiveness: The antivirus software’s ability to remove or quarantine detected malware and restore affected systems to a safe state.

- Reporting and Analysis: Test results are compiled into detailed reports that provide insights into the antivirus software’s strengths, weaknesses, and overall effectiveness. These reports may include performance benchmarks, comparative analyses with other products, and recommendations for users and organizations.

- Continuous Monitoring and Updates: Antivirus testing is an ongoing process because the threat landscape evolves rapidly and new malware variants emerge regularly. Testers continuously monitor vendors’ updates and responses to emerging threats to verify that the products remain effective and up to date.
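The static detection step and the detection-rate and false-positive-rate metrics can be illustrated together with a toy signature scanner. This is a minimal sketch, assuming that signatures are plain byte patterns and that every test file carries a ground-truth label (malicious or legitimate) assigned by the testers. Real engines use far richer signatures alongside heuristics and behavioral analysis. The names `SIGNATURES`, `LabeledFile`, `scan`, and `evaluate` are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical signature database: name -> byte pattern that marks a known threat.
SIGNATURES = {
    "Demo.Trojan.A": b"\xde\xad\xbe\xef",
    "Demo.Worm.B": b"EVIL_PAYLOAD",
}


@dataclass(frozen=True)
class LabeledFile:
    name: str
    data: bytes
    is_malicious: bool  # ground-truth label assigned by the testers


def scan(data, signatures=SIGNATURES):
    """Static check: return the first matching signature name, or None if clean."""
    for sig_name, pattern in signatures.items():
        if pattern in data:
            return sig_name
    return None


def evaluate(files, signatures=SIGNATURES):
    """Return (detection rate, false positive rate), both as percentages."""
    malicious = [f for f in files if f.is_malicious]
    clean = [f for f in files if not f.is_malicious]
    # Malicious files the scanner flagged (true positives).
    detected = sum(1 for f in malicious if scan(f.data, signatures) is not None)
    # Legitimate files the scanner flagged (false positives).
    false_alarms = sum(1 for f in clean if scan(f.data, signatures) is not None)
    detection_rate = 100.0 * detected / len(malicious) if malicious else 0.0
    fp_rate = 100.0 * false_alarms / len(clean) if clean else 0.0
    return detection_rate, fp_rate


if __name__ == "__main__":
    corpus = [
        LabeledFile("sample1.bin", b"header\xde\xad\xbe\xefbody", True),
        LabeledFile("sample2.bin", b"...EVIL_PAYLOAD...", True),
        LabeledFile("sample3.bin", b"novel variant, no known pattern", True),
        LabeledFile("report.docx", b"quarterly figures", False),
        LabeledFile("tool.exe", b"legit tool mentions EVIL_PAYLOAD in docs", False),
    ]
    dr, fpr = evaluate(corpus)
    print(f"Detection rate: {dr:.1f}%  False positive rate: {fpr:.1f}%")
```

With this toy corpus the scanner detects 2 of the 3 malicious files, missing the novel variant, and flags 1 of the 2 legitimate files. This illustrates why signature matching is typically complemented by dynamic analysis, and why false positive testing is run on clean software alongside the malware set.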

By following these testing procedures, testers can provide valuable insights into the capabilities and performance of antivirus software, helping users and organizations make informed decisions when selecting security solutions to protect their systems and data.